The HANDS project used Computer Vision (CV) and Fast Fourier Transforms (FFT) to produce visuals accompanying Ravel’s Mother Goose suite.

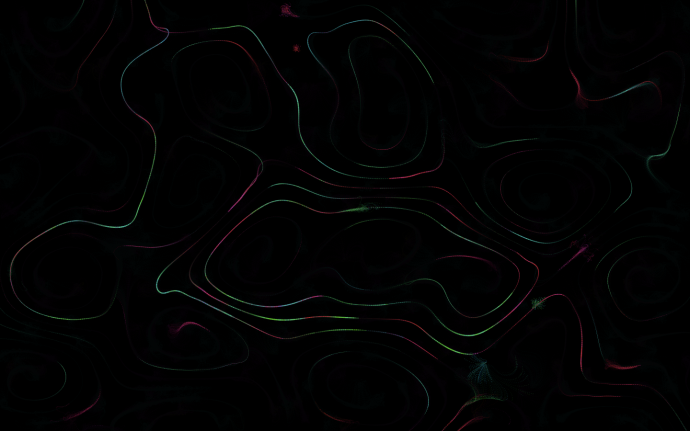

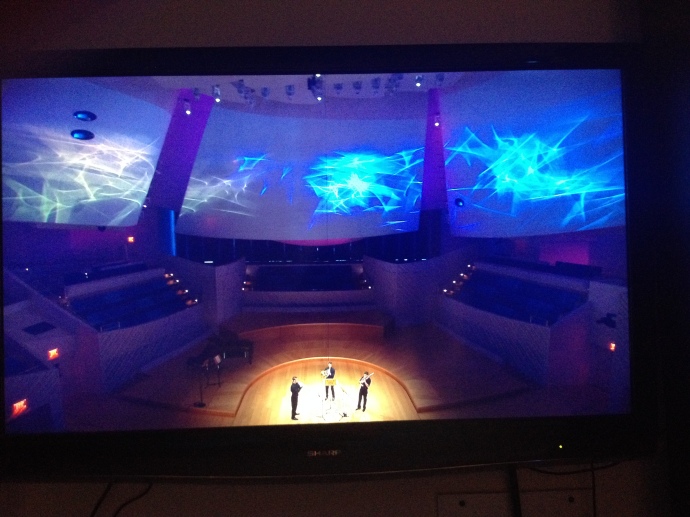

Originally inspired by digits, a collaboration between Luke Dubois and Kathleen Supove, I wanted to use CV to let the gestures of the performer’s hands drive the visuals. The final form of the piece, shown in the center, used optical flow, which reports the velocity and direction of the moving parts of the image, for two purposes: 1) adding forces to a slowly fading vector field and 2) spawning particles at those positions, with initial velocities based on the detected movement, which then flow through that vector field. The appearance of the particles is altered throughout the performance: they begin so small that they are not visible, then slowly increase in size to appear as white shimmering dust. They gradually evolve into large colored forms, taking their hue from the direction in which they move.
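
The two roles of the optical flow can be sketched roughly as follows. This is a minimal illustration in plain Python rather than the code used in the performance: the class names, the `decay` and `threshold` parameters, and the assumption that flow arrives as `(x, y, u, v)` samples in grid coordinates are all hypothetical.

```python
import math

class FlowField:
    """Grid of 2D vectors that fades toward zero a little each frame."""
    def __init__(self, cols, rows, decay=0.95):
        self.cols, self.rows, self.decay = cols, rows, decay
        self.vx = [[0.0] * cols for _ in range(rows)]
        self.vy = [[0.0] * cols for _ in range(rows)]

    def _cell(self, x, y):
        c, r = math.floor(x), math.floor(y)
        inside = 0 <= c < self.cols and 0 <= r < self.rows
        return (c, r) if inside else None

    def add_force(self, x, y, fx, fy):
        cell = self._cell(x, y)
        if cell:
            c, r = cell
            self.vx[r][c] += fx
            self.vy[r][c] += fy

    def sample(self, x, y):
        cell = self._cell(x, y)
        if not cell:
            return 0.0, 0.0
        c, r = cell
        return self.vx[r][c], self.vy[r][c]

    def fade(self):
        for r in range(self.rows):
            for c in range(self.cols):
                self.vx[r][c] *= self.decay
                self.vy[r][c] *= self.decay

class Particle:
    def __init__(self, x, y, vx, vy):
        self.x, self.y, self.vx, self.vy = x, y, vx, vy

    def update(self, field):
        # The field acts as a force on the particle's velocity.
        fx, fy = field.sample(self.x, self.y)
        self.vx += fx
        self.vy += fy
        self.x += self.vx
        self.y += self.vy

    def hue(self):
        # Direction of travel mapped to a hue in [0, 1).
        angle = math.atan2(self.vy, self.vx)
        return (angle % (2 * math.pi)) / (2 * math.pi)

def step(field, particles, flow_samples, threshold=0.5):
    """One frame: fade the field, inject flow, spawn and move particles."""
    field.fade()
    for x, y, u, v in flow_samples:
        if math.hypot(u, v) >= threshold:  # ignore near-static pixels
            field.add_force(x, y, u, v)
            particles.append(Particle(x, y, u, v))
    for p in particles:
        p.update(field)
```

Calling `step` once per video frame with the flow samples for that frame keeps the field and the particles in sync; the growth in particle size over the course of the piece would be a separate, time-driven parameter.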

CV frame differencing is also used to detect moving parts of the image, though the result is shown in a different visual form. Moving pixels are detected at the edges of the performer’s silhouette and used to fill a CV image. This image is then blurred to soften the outline and faded over time, which produces a ghosting effect. The video itself was also modified: a combination of brightness reduction and blurring was applied before drawing it underneath the effects described above. All the pieces worked in tandem to produce a gentle accompaniment for the music.
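
The ghosting pipeline (difference, fill, blur, fade) might look something like the sketch below. It works on small grayscale frames stored as lists of rows; the function names, the 3x3 box blur, and the `threshold` and `fade` values are assumptions chosen for illustration, not the settings used in the piece.

```python
def frame_difference(prev, curr, threshold=30):
    """Mark pixels whose brightness changed by more than threshold."""
    return [
        [255 if abs(c - p) > threshold else 0 for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]

def box_blur(img):
    """3x3 box blur; edge pixels average only the neighbors that exist."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total, count = 0.0, 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        total += img[rr][cc]
                        count += 1
            out[r][c] = total / count
    return out

def update_ghost(ghost, prev, curr, fade=0.9, threshold=30):
    """Fade the old ghost image, stamp in new motion, then soften it."""
    mask = frame_difference(prev, curr, threshold)
    merged = [
        [max(g * fade, m) for g, m in zip(ghost_row, mask_row)]
        for ghost_row, mask_row in zip(ghost, mask)
    ]
    return box_blur(merged)
```

Because the blur runs every frame, older motion both dims and spreads, which is what gives the trailing outline its soft edge.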

On the side sails, a separate visual system used particles slowly radiating out from a center point. FFT was used to shift the colors between blue and red based on the low and high frequencies, and the brightness was based on the volume of the audio stream. This system was repeated five times to form what began as a ring and evolved into a more nebulous cloud of organic shapes.
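
One way to turn an audio buffer into such a color is sketched below. It uses a naive discrete Fourier transform so that it runs with the standard library alone (a real-time system would use an FFT routine). The direction of the mapping (low frequencies toward blue, high toward red), the `split` point between the bands, and the use of RMS as the volume measure are assumptions made for this example.

```python
import cmath
import math

def dft_magnitudes(samples):
    """Magnitudes of the first half of the spectrum (naive O(n^2) DFT)."""
    n = len(samples)
    return [
        abs(sum(s * cmath.exp(-2j * cmath.pi * k * t / n)
                for t, s in enumerate(samples)))
        for k in range(n // 2)
    ]

def audio_to_color(samples, split=0.5):
    """Return (r, g, b) in [0, 1]: band balance sets hue, volume sets brightness."""
    mags = dft_magnitudes(samples)[1:]  # drop the DC bin
    cut = max(1, int(len(mags) * split))
    low, high = sum(mags[:cut]), sum(mags[cut:])
    total = low + high
    # 0.0 means all energy in the low band, 1.0 all in the high band.
    balance = high / total if total > 0 else 0.5
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    brightness = min(1.0, rms)
    return (balance * brightness, 0.0, (1.0 - balance) * brightness)
```

For instance, a buffer holding a pure low tone yields a color that is almost entirely blue, and scaling the same buffer down in amplitude dims it without changing the balance.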

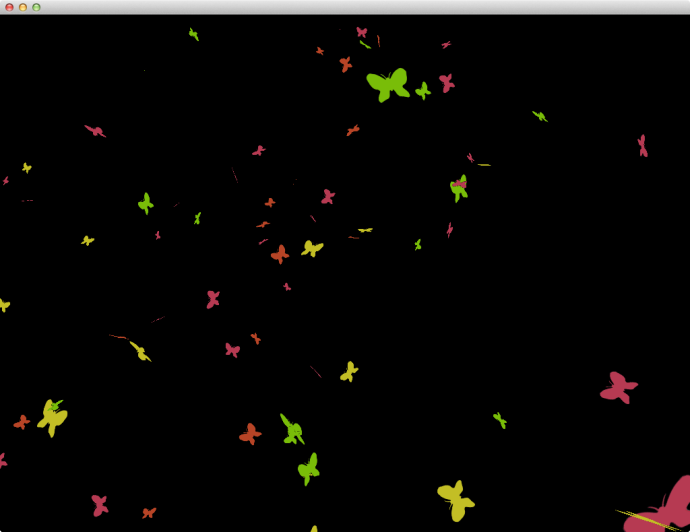

Several other looks were developed but ultimately not used during the performance. These included a particle system which began as a firework burst but quickly morphed into trails of particles as forces based on Perlin noise were added to each particle to derive its path. Another look, which used images of butterflies rather than simple circles, was also explored.
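
As a rough sketch of the noise-driven look, the code below starts particles in a radial burst and then steers each one with a force whose direction comes from a 2D gradient noise written in the style of Perlin noise. The noise implementation, the dictionary-based particles, and the `scale` and `strength` parameters are illustrative assumptions and are not taken from the project code.

```python
import math
import random

def make_perlin(seed=0):
    """Build a 2D Perlin-style gradient noise function (output roughly in [-1, 1])."""
    rng = random.Random(seed)
    perm = list(range(256))
    rng.shuffle(perm)
    perm += perm  # doubled so nested lookups never overflow
    grads = [(math.cos(2 * math.pi * i / 8), math.sin(2 * math.pi * i / 8))
             for i in range(8)]

    def fade(t):
        return t * t * t * (t * (t * 6 - 15) + 10)

    def lerp(a, b, t):
        return a + t * (b - a)

    def corner(ix, iy, x, y):
        # Dot product of the lattice gradient with the offset to (x, y).
        gx, gy = grads[perm[perm[ix & 255] + (iy & 255)] % 8]
        return gx * (x - ix) + gy * (y - iy)

    def noise(x, y):
        x0, y0 = math.floor(x), math.floor(y)
        u, v = fade(x - x0), fade(y - y0)
        top = lerp(corner(x0, y0, x, y), corner(x0 + 1, y0, x, y), u)
        bottom = lerp(corner(x0, y0 + 1, x, y), corner(x0 + 1, y0 + 1, x, y), u)
        return lerp(top, bottom, v)

    return noise

def burst(count, speed=1.0):
    """Particles leaving the origin evenly in all directions."""
    return [
        {"x": 0.0, "y": 0.0,
         "vx": speed * math.cos(2 * math.pi * i / count),
         "vy": speed * math.sin(2 * math.pi * i / count)}
        for i in range(count)
    ]

def step_particles(particles, noise, t, scale=0.05, strength=0.2):
    """Add a noise-directed force to each particle, then move it."""
    for p in particles:
        angle = 2 * math.pi * noise(p["x"] * scale, p["y"] * scale + t)
        p["vx"] += strength * math.cos(angle)
        p["vy"] += strength * math.sin(angle)
        p["x"] += p["vx"]
        p["y"] += p["vy"]
```

Because nearby positions sample similar noise values, neighboring particles bend in similar directions, which is what turns the initial burst into coherent trails.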

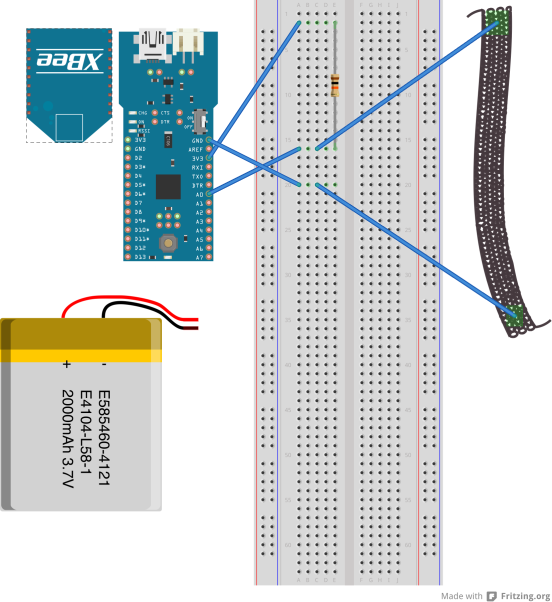

All code is available in separate folders in the NWS 2013 GitHub repo.